Why Bigger AI Models Are No Longer Always Better

Introduction

For the last few years, AI progress felt simple: bigger models = better results.

From GPT-3 to GPT-4 and beyond, scale was king.

But in 2025–2026, a quiet shift is happening.

Developers are increasingly choosing Small Language Models (SLMs) over massive LLMs, and for many workloads they are getting better results.

This article explains what SLMs are, why they matter, and when developers should use them instead of large models.

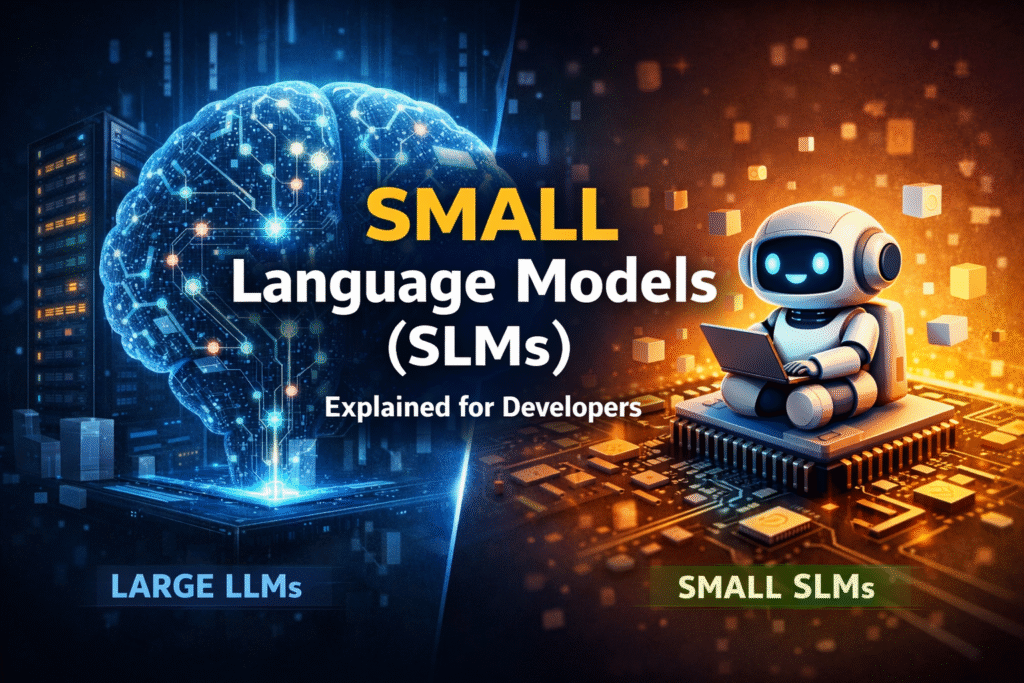

What Are Small Language Models?

Small Language Models (SLMs) are language models with far fewer parameters than frontier LLMs. There is no strict cutoff, but the term typically covers:

- 100M to 7B parameters

(compared to 70B to 1T+ in large models)

They are:

- Faster

- Cheaper

- Easier to control

- Often good enough for real applications

Examples include:

- Phi family

- Gemma (small variants)

- LLaMA (small variants)

- Distilled task-specific models

Why Developers Are Moving Away from Giant Models

1. Latency Matters More Than Intelligence

A 300ms response feels instant.

A 4-second response feels broken.

SLMs:

- Run faster

- Can be deployed closer to users

- Work well in real-time apps (chat, autocomplete, search)
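Latency claims are easy to check before committing to a model. The following is a minimal sketch of a timing harness, using only the standard library; `fake_model_call` is a hypothetical stand-in for whatever inference request your app actually makes, and the percentile uses a simple nearest-rank method.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `call` `runs` times and return each duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(durations_ms: List[float]) -> Dict[str, float]:
    """Return the median and a nearest-rank 95th percentile."""
    ordered = sorted(durations_ms)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {"p50_ms": statistics.median(ordered), "p95_ms": ordered[p95_index]}


def fake_model_call() -> str:
    # Hypothetical stand-in for a real inference request.
    time.sleep(0.005)
    return "ok"


print(summarize(measure_latency_ms(fake_model_call)))
```

Running the same harness against a small and a large model, with the same prompts, gives a like-for-like comparison. The p95 figure matters more than the average, since slow outliers are what users notice.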

2. Cost Is a Silent Killer

Large models:

- Expensive per request

- Cost scales with traffic

- Painful for startups and internal tools

SLMs:

- Can run on a CPU or a small GPU

- Lower inference cost

- More predictable billing when self-hosted

For many apps, getting roughly 90% of the accuracy at roughly 10% of the cost is a win. (These figures are illustrative, not a benchmark.)
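To see how per-token pricing scales with traffic, here is a back-of-the-envelope sketch. The prices and traffic numbers are hypothetical placeholders, not quotes from any provider; plug in your own.

```python
def monthly_cost(
    requests_per_day: int,
    tokens_per_request: int,
    price_per_million_tokens: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend for a token-priced model."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical prices in dollars per million tokens (assumptions for illustration).
LARGE_MODEL_PRICE = 10.00
SMALL_MODEL_PRICE = 1.00

large = monthly_cost(50_000, 800, LARGE_MODEL_PRICE)
small = monthly_cost(50_000, 800, SMALL_MODEL_PRICE)

print(f"large model: ${large:,.0f}/month")  # large model: $12,000/month
print(f"small model: ${small:,.0f}/month")  # small model: $1,200/month
```

The ratio between the two totals is just the ratio of the prices, but seeing the absolute monthly figure at real traffic levels is usually what drives the decision.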

3. Most Apps Don’t Need “General Intelligence”

Ask yourself:

- Do you need the model to write poetry?

- Or just classify tickets, summarize text, and extract data?

SLMs shine at:

- Narrow domains

- Repetitive tasks

- Structured outputs
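Structured output is only useful if you validate it before trusting it. Below is a minimal sketch of that check for a ticket-classification task; the category names and the `category`/`priority` fields are assumptions made up for this example, not a standard schema.

```python
import json
from typing import Optional

# Hypothetical label set for a support-ticket classifier.
ALLOWED_CATEGORIES = {"billing", "bug", "feature_request", "other"}


def parse_ticket_label(raw_output: str) -> Optional[dict]:
    """Return the parsed label if it matches the expected shape, else None."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None

    category = data.get("category")
    priority = data.get("priority")

    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return None
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(priority, bool) or not isinstance(priority, int):
        return None
    if not 1 <= priority <= 5:
        return None
    return {"category": category, "priority": priority}


print(parse_ticket_label('{"category": "billing", "priority": 2}'))
print(parse_ticket_label("Sure! The category is billing."))
```

A `None` result is a natural trigger for a retry or for handing the request to a different model.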

SLMs vs LLMs: Developer View

| Feature | Small Models | Large Models |
|---|---|---|
| Speed | ⚡ Very fast | 🐢 Slower |
| Cost | 💰 Low | 💸 High |
| Accuracy | 🎯 Task-specific | 🧠 General |
| Control | 🔧 High | ❓ Low |
| Deployment | Local / Edge | Cloud |
| Privacy | ✅ Better | ⚠️ Risky |

Real-World Use Cases Where SLMs Win

✅ Backend Automation

- Log analysis

- Error classification

- API response formatting

✅ Mobile & Edge Devices

- Offline AI

- On-device suggestions

- Privacy-first features

✅ Business Tools

- CRM tagging

- Support ticket routing

- Invoice & document parsing

✅ Internal Developer Tools

- Code lint explanations

- PR summaries

- Commit message generation

Architecture: How Developers Use SLMs Today

A modern AI stack looks like this:

Frontend

↓

Node.js / Python API

↓

Small Language Model (local or hosted)

↓

Vector DB / MongoDB / Redis

Key idea:

Use SLMs as workers, not thinkers

Let them:

- Extract

- Classify

- Transform

- Summarize
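One way to picture the "worker" idea in the API layer is a small dispatcher that maps each narrow job to its own prompt. This is a sketch under assumptions: the prompt wording is illustrative, and `generate` is a hypothetical callable that wraps whatever SLM runtime or hosted endpoint you use.

```python
from typing import Callable, Dict

# Hypothetical prompt templates, one per narrow job. The wording is
# illustrative; real prompts should be tuned for the chosen model.
TASK_PROMPTS: Dict[str, str] = {
    "extract": "List every email address in the text below, one per line.\n\n{text}",
    "classify": "Label the text below as 'bug', 'billing', or 'other'.\n\n{text}",
    "transform": "Rewrite the text below as a single JSON string value.\n\n{text}",
    "summarize": "Summarize the text below in one sentence.\n\n{text}",
}


def run_task(task: str, text: str, generate: Callable[[str], str]) -> str:
    """Build the prompt for `task` and pass it to the model callable."""
    template = TASK_PROMPTS.get(task)
    if template is None:
        raise ValueError(f"unknown task: {task!r}")
    return generate(template.format(text=text)).strip()


def fake_generate(prompt: str) -> str:
    # Stand-in for a real SLM call (local runtime or hosted endpoint).
    return f"[model output for {len(prompt)} chars of prompt]"


print(run_task("summarize", "The deploy failed twice before succeeding.", fake_generate))
```

Keeping the model behind a plain callable means the same dispatcher works whether the SLM runs locally or behind an HTTP API, and makes it easy to swap in a fake for tests.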

SLM + LLM Hybrid Pattern (Very Powerful)

Smart teams use both:

- SLM → fast, cheap, frequent tasks

- LLM → complex reasoning or fallback

Example:

User Query

↓

SLM handles request

↓

If confidence < threshold

→ escalate to LLM

This can cut cost substantially while keeping quality close to an LLM-only setup, provided the confidence signal is reliable.
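The escalation flow can be sketched in a few lines. How the confidence score is produced is an assumption here (the article does not specify it); a classifier probability or a validation check are common choices. `fake_small` and `fake_large` are hypothetical stand-ins for real model calls, and the 0.8 threshold is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class RoutedAnswer:
    text: str
    served_by: str     # "slm" or "llm"
    confidence: float  # confidence reported by the small model


def answer(
    query: str,
    small_model: Callable[[str], Tuple[str, float]],
    large_model: Callable[[str], str],
    threshold: float = 0.8,
) -> RoutedAnswer:
    """Try the small model first; escalate when its confidence is too low."""
    text, confidence = small_model(query)
    if confidence >= threshold:
        return RoutedAnswer(text, "slm", confidence)
    return RoutedAnswer(large_model(query), "llm", confidence)


def fake_small(query: str) -> Tuple[str, float]:
    # Hypothetical SLM: confident only on refund questions.
    if "refund" in query.lower():
        return ("refund", 0.95)
    return ("unknown", 0.30)


def fake_large(query: str) -> str:
    # Hypothetical LLM fallback.
    return "detailed answer from the large model"


print(answer("How do I get a refund?", fake_small, fake_large).served_by)       # slm
print(answer("Compare these two contracts", fake_small, fake_large).served_by)  # llm
```

Logging `served_by` for each request shows what fraction of traffic actually reaches the large model, which is the number that determines the savings.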

Training & Fine-Tuning: Another SLM Advantage

SLMs are:

- Easier to fine-tune

- Faster to iterate

- Better for domain adaptation

Instead of prompt engineering forever, you can:

- Fine-tune once

- Get consistent outputs

- Reduce hallucinations

Are SLMs Less Accurate?

On broad, general-purpose tasks, usually yes. But that’s not always a problem.

Within a narrow domain, a well-tuned SLM can:

- Hallucinate less

- Follow rules more consistently

- Produce structured output more reliably

Accuracy depends on:

- Task scope

- Data quality

- Prompt design
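Because accuracy depends on the task, the reliable way to answer the question is to measure it on your own labeled examples. The sketch below assumes a classification task; the example tickets and `keyword_model` are hypothetical placeholders for your data and your model.

```python
from typing import Callable, List, Tuple


def accuracy(predict: Callable[[str], str], examples: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the prediction equals the expected label."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for text, expected in examples if predict(text) == expected)
    return correct / len(examples)


# Hypothetical labeled set; in practice, sample it from real traffic.
EXAMPLES = [
    ("I was charged twice", "billing"),
    ("App crashes on login", "bug"),
    ("Refund my last invoice", "billing"),
    ("Button does nothing when clicked", "bug"),
]


def keyword_model(text: str) -> str:
    # Stand-in for a real model call, so the sketch runs on its own.
    lowered = text.lower()
    return "billing" if ("charged" in lowered or "invoice" in lowered) else "bug"


print(f"accuracy: {accuracy(keyword_model, EXAMPLES):.0%}")  # accuracy: 100%
```

Running the same examples through both a small and a large model turns "is the SLM accurate enough?" into a number you can compare against the cost difference.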

When NOT to Use Small Models

Avoid SLMs if you need:

- Deep multi-step reasoning

- Creative writing

- Cross-domain knowledge

- Long-context understanding

In those cases, large models still win.

The Big Shift: AI Is Becoming Modular

We’re moving from:

“One huge brain does everything”

To:

“Many small brains, each with a job”

SLMs are microservices for intelligence.

Final Thoughts

Small Language Models aren’t a downgrade.

They’re an engineering upgrade.

For developers, especially backend and system designers:

- SLMs = speed + control + cost efficiency

- LLMs = power + reasoning

The future isn’t big vs small.

It’s the right model for the right job.